Understanding and Addressing AI Bias

The same biases baked into all our systems are getting baked into AI

by Suhlle Ahn

Whether you’re using AI software to screen candidates in HR recruitment or to read mammograms, there’s no escaping the AI revolution. Nothing screams “it’s here!” more than the recent buzz around ChatGPT.

But as AI use skyrockets, if you care about inequality and understand how bias can contribute to it, you need to sit up and take notice. It’s time to start paying attention to where and how AI—specifically, machine learning—is being used in your work and life.

And if you identify with an underrepresented group—or if you’ve advocated for any—then rest unassured. Somewhere in the world of AI, individuals from these groups are being under-selected, deprioritized, filtered out, discarded as sub-optimal, or rejected as outside the normal range, according to some algorithmic rule.

Sounds hyperbolic?

If you’ve learned anything about unconscious bias and how it makes its way into the systems we create as humans, you may disagree.

If you’re aware that ALL human beings rely on cognitive shortcuts, because it’s how our brains navigate the world; if you’ve learned that we’re ALL prone to errors of bias—no matter how good our intentions—then it won’t be a stretch to grasp how computer operations designed to mimic a certain kind of human decision-making—but at exponential speeds and capacity—also have the potential to pump out biased and erroneous conclusions, on steroids.

In fact, many data scientists are showing how the same biases that creep their way into all social systems are creeping their way into every aspect of AI that has been “touched” by the human hand.

That’s pretty much all of them: the data computers are “fed” and trained on; the algorithms programmers design; even the categorical logic basic computer programming relies on.

It’s because, at base, there’s no such thing as AI that hasn’t been set in motion by the human hand (and brain) as a kind of prime mover.

This is the point NYU data journalism professor Meredith Broussard makes when she sounds the alarm in her new book, More Than a Glitch: Confronting Race, Gender, and Ability Bias in Tech:

“All of the systemic problems that exist in society also exist in algorithmic systems. It’s just harder to see those problems when they’re embedded in code and data.”

Broussard has even coined the term “technochauvinism” for the presumption “that computational solutions are superior, that computers are somehow elevated, more objective, more neutral, more unbiased.”

Understanding AI Bias

To step back, what exactly IS AI bias? Or what is the kind of AI bias that most people sounding the alarm are concerned about?

There are many categories, and, actually, ChatGPT can give you a pretty good list, if you ask it to. (Try it and see!) But the main kind that has drawn attention is algorithmic bias.*

Algorithmic bias occurs in the context of machine learning, which itself is a subcategory of artificial intelligence.**

But let’s take one more step back and start with the definition of an algorithm.

I like this one by Vinhcent Le, Senior Legal Counsel of Tech Equity at greenlining.org:

“An algorithm is a set of rules or instructions used to solve a problem or perform a task.”

More specifically, the kinds of algorithms most concerning for their ability to produce discriminatory outcomes are those “designed to make predictions and answer questions.”

Another term for this kind of algorithm is an “automated decision system.” I find this helpful, because it contains a description of what lies at the heart of this article: decision-making.

Essentially, these algorithms take the human out of human decision-making. They turn a decision into a computational operation. But this happens only after a human decision-maker has set up the rules telling the computer how to make that decision.***

Taking Le’s definition, algorithmic bias occurs when:

“an algorithmic decision creates unfair outcomes that unjustifiably and arbitrarily privilege certain groups over others.”
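One rough way to put a number on “privileging certain groups over others” is to compare how often each group receives the favorable outcome. The sketch below is not Le’s method. The function names and the screening data are made up for illustration, and the ratio it computes is only one of many possible fairness checks.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical screening outcomes (invented numbers, not real data)
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # group_a is selected 40% of the time, group_b 20%
print(round(ratio, 2))  # 0.5
```

A ratio well below 1 does not prove unfairness by itself, but it is the kind of signal that should prompt a closer look. Under the “four-fifths” rule of thumb used in U.S. employment guidance, a ratio under 0.8 is often treated as a warning sign.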

Examples of these unfair outcomes are already much-documented. Le gives a comprehensive list from some key fields, including healthcare, employment, finance, and criminal justice.

Broussard describes examples at length in her book.

But perhaps the most widely known example is bias in facial recognition software.

One of the early voices to expose this problem was Canadian computer scientist Joy Buolamwini. The documentary Coded Bias, which features her work (or see her TEDx talk), tells how, as a computer science student at MIT, she discovered that facial recognition software could not even “see” her, because it could not detect her darker skin color.

Yet even when calibrated to be able to, facial recognition software is error-prone—often along racial, gender, and age lines.

Broussard cites this statistic, based on a 2019 report:

“[C]ommercial facial recognition systems…falsely identify Black and Asian faces 10 to 100 times more often than white faces. Native American faces generated the highest rate of errors. Older adults were misidentified ten times more often than middle-aged adults.”

Facial recognition software is also known to misidentify women more often than men, especially Black women. (See AI systems identifying Oprah Winfrey, Michelle Obama, and Serena Williams as male, and see this article by Michael McKenna for more examples of AI bias.)
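To make a phrase like “10 to 100 times more often” concrete, here is a minimal sketch of how a false match rate could be compared across groups. It assumes you already have labeled test results. The group names and counts are invented, and this is not the methodology of the 2019 report Broussard cites.

```python
def false_match_rate_by_group(records):
    """records: iterable of (group, predicted_match, true_match) tuples.
    Returns {group: false matches / all pairs that truly do not match}."""
    false_matches = {}
    non_matches = {}
    for group, predicted, actual in records:
        if not actual:  # only pairs that are truly different people count here
            non_matches[group] = non_matches.get(group, 0) + 1
            if predicted:
                false_matches[group] = false_matches.get(group, 0) + 1
    return {g: false_matches.get(g, 0) / n for g, n in non_matches.items()}

# Hypothetical test results (invented numbers, not real data)
records = (
    [("group_x", False, False)] * 999 + [("group_x", True, False)] * 1
    + [("group_y", False, False)] * 990 + [("group_y", True, False)] * 10
    + [("group_x", True, True)] * 50  # correct matches are ignored by this metric
)

rates = false_match_rate_by_group(records)
print(rates)
print(round(rates["group_y"] / rates["group_x"], 1))  # 10.0
```

In this made-up data, one group is falsely matched ten times as often as the other, even though both error rates look tiny when viewed on their own.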

The Hows and Whys

How do blatant failures like these occur?

The causes are numerous and overlapping. But three common ones are:

Underrepresentation in training data sets. EXAMPLE: facial recognition software fails to detect dark skin because the face samples used to train the computers are mostly light-skinned. Or it misidentifies women, older people, and Asians, because the training samples are mostly younger males with European features.

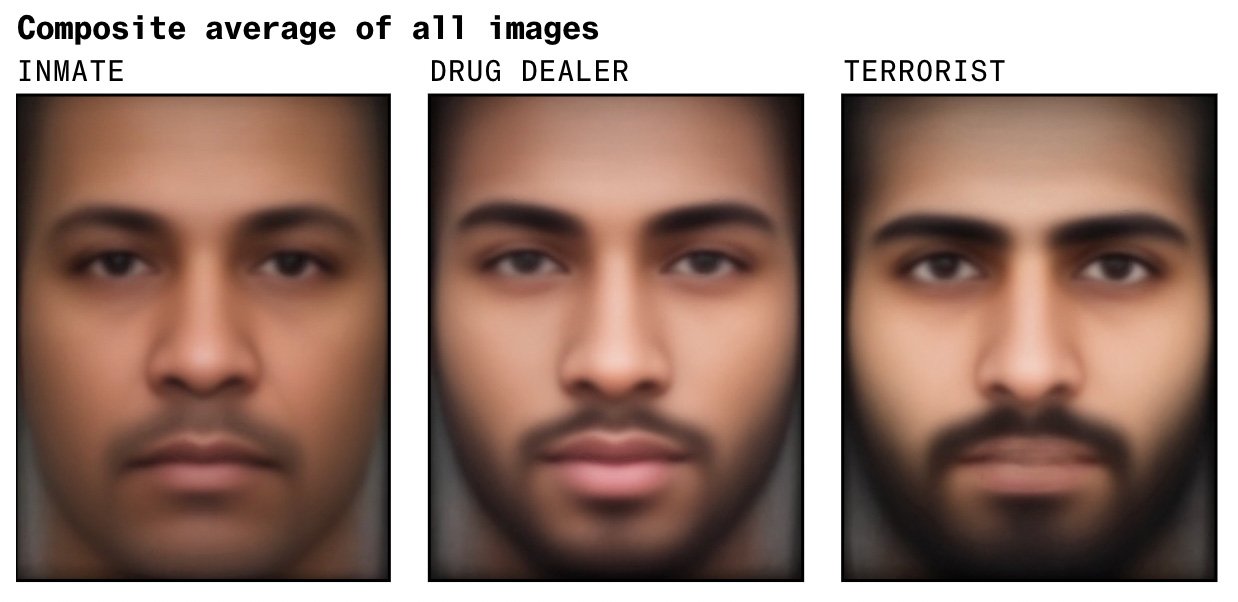

Biases embedded in the data computers are trained on. EXAMPLE: When prompted to generate an AI “terrorist,” Stable Diffusion’s text-to-image generative AI creates a composite male face that looks Middle Eastern. It uses raw data from a database of over 5 billion images pulled from the internet “without human curation” (i.e., it’s full of stereotypes). It similarly shows a Black male composite face when prompted to generate an “inmate” or “drug dealer,” and a white male face when asked for a “CEO.”

Badly designed algorithms that use some type of data as a proxy for something else. EXAMPLE: zip codes used as a proxy for a loan applicant’s “risk level.” Or dollars historically spent on healthcare as a proxy to predict who will need more/less healthcare in the future.

Image: Bloomberg.com, “HUMANS ARE BIASED. GENERATIVE AI IS EVEN WORSE” (https://www.bloomberg.com/graphics/2023-generative-ai-bias/)
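The first cause above, underrepresentation in training data, is one of the easier ones to check for. The sketch below compares each group’s share of a training set against a reference share. The group labels, the 80/20 split, and the 50/50 reference are all assumptions chosen for illustration.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares):
    """Share of each group in the sample minus its share in a reference population.
    Negative values mean the group is underrepresented in the sample."""
    counts = Counter(sample_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample is empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical face data set: 80 lighter-skinned samples, 20 darker-skinned samples
training_labels = ["lighter_skin"] * 80 + ["darker_skin"] * 20
reference = {"lighter_skin": 0.5, "darker_skin": 0.5}  # assumed target mix

gaps = representation_gaps(training_labels, reference)
for group, gap in gaps.items():
    print(group, round(gap, 2))
```

A check like this only catches groups you thought to label in the first place, which is part of why who is asking the questions matters so much.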

As mathematician Cathy O’Neil, author of Weapons of Math Destruction, explains, any and all of these can lead to dangerous feedback loops that can perpetuate these biases and inequalities at an exponential scale.
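Here is a toy sketch of such a feedback loop, built on the healthcare-spending proxy from the list above. It is not taken from O’Neil’s book. It assumes two groups with identical real need, a fixed budget, and an algorithm that predicts next year’s need purely from last year’s spending. All names and numbers are invented.

```python
def simulate_allocation(spending, budget, years):
    """Each year, split a fixed budget in proportion to last year's spending,
    then treat what each group received as its new 'historical spending'."""
    history = [dict(spending)]
    for _ in range(years):
        total = sum(spending.values())
        spending = {g: budget * s / total for g, s in spending.items()}
        history.append(spending)
    return history

# Assumption: both groups have the same true need, but group_b starts out
# with less money spent on it for historical reasons.
start = {"group_a": 600.0, "group_b": 400.0}
history = simulate_allocation(start, budget=1000.0, years=5)

for year, spending in enumerate(history):
    share_b = spending["group_b"] / sum(spending.values())
    print(year, round(share_b, 2))  # group_b's share stays at 0.4 every year
```

Even in this mild version, the gap never closes, because the prediction keeps feeding on the outcome it produced. This sketch shows only perpetuation. Real systems can also amplify the gap.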

These are some of the hows—i.e., some technical explanations. But behind these are also the whys.

If you’ve followed Tidal Equality, you know we talk about how small groups of homogeneous people working in a bubble can lead to the perpetuation of biases.

2022 Munich Security Conference (MSC) “CEO Lunch”

Image: Michael Bröcker/The Pioneer/dpa/picture alliance

If you’re aware of this, then you simply have to look at who is writing the code that’s fueling the AI revolution. Broussard says exactly this, writing,

“[T]echnology is often built by a small, homogenous group of people.”

Clearly, we need more diverse voices designing algorithms, training computers, questioning the data they’re fed, and vetting the decisions they make.

It’s no coincidence that Buolamwini and Broussard are two of the “few Black women doing research in artificial intelligence.” Both were trained in data science. It’s why they’ve been able to pierce the illusion of neutrality that surrounds so much technology.

Broussard learned to program databases in college. This helped her catch a bias in the structure of much legacy coding itself: that gender mostly gets encoded as a fixed, binary value.
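One way to picture what a “fixed, binary value” looks like in code is the contrast below. Both record types are hypothetical and are not drawn from Broussard’s book or from any real system. The first mimics a legacy design, and the second is just one possible alternative.

```python
from dataclasses import dataclass, FrozenInstanceError
from typing import Optional

@dataclass(frozen=True)
class LegacyPerson:
    name: str
    is_male: bool  # binary: only two possible values; frozen: cannot be changed later

@dataclass
class Person:
    name: str
    gender: Optional[str] = None  # self-described, optional, and editable

legacy = LegacyPerson(name="Sam", is_male=True)
try:
    legacy.is_male = False  # the legacy record refuses any update
    legacy_update_blocked = False
except FrozenInstanceError:
    legacy_update_blocked = True

person = Person(name="Sam")   # gender can simply be left unset
person.gender = "nonbinary"   # and can be updated in the person's own words

print(legacy_update_blocked, person.gender)
```

The design choice that matters here is less the syntax than the assumption behind it: the legacy structure decides in advance what answers a person is allowed to give.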

Some solutions and one big takeaway

Broussard boils the “solutions” down to this:

“Sometimes we can address the problem by making the tech less discriminatory, using a variety of computational methods and checks. Sometimes that is not possible, and we need to not use tech at all. Sometimes the solution is somewhere in between.”

Toward the goal of making the tech less discriminatory, she and others are working to educate the public and push for improved transparency and accountability at all levels of AI use.****

But the broader theme and warning that emerges among all data scientists sounding the alarm is that we simply cannot take the human out of decision-making entirely, if we hope to prevent algorithmic bias from doing vast amounts of harm.

As A.I. ethicist Chrissann Ruehle states:

“I need to retain the decision-making authority…I have an ethical responsibility that I need to direct the work of artificial intelligence.”

In short, wherever AI is being used, humans are still needed as guides, check points, and final arbiters.
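As a minimal sketch of what “final arbiter” could mean in practice, the routing rule below lets the system act alone only on confident, favorable recommendations and sends everything else to a person. The class, the function, and the 0.9 threshold are all hypothetical choices for illustration, not a standard.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    applicant_id: str
    outcome: str       # "approve" or "reject"
    confidence: float  # the model's own score, between 0 and 1

def route(rec, min_confidence=0.9):
    """Return 'auto' only for confident approvals; everything else goes to a person."""
    if rec.outcome == "approve" and rec.confidence >= min_confidence:
        return "auto"
    return "human_review"

queue = [
    Recommendation("a1", "approve", 0.95),
    Recommendation("a2", "reject", 0.99),   # adverse decisions always get a human look
    Recommendation("a3", "approve", 0.50),  # so do uncertain ones
]
for rec in queue:
    print(rec.applicant_id, route(rec))
```

The design choice here is that no one is rejected by the machine alone, however confident it claims to be.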

Yet humans also create the biased data, flawed algorithms, and original biased decisions that have led to so much bias in the system in the first place.

So what gives?

What’s so special about human decision-making that machine decision-making can’t fully replicate it? (At least not yet.)

The System 1 and System 2 Thinking Divide

A partial answer may lie in our capacity for two different types of thinking.

Again, if you’ve followed us at Tidal Equality, you know that our Equity Sequence® methodology is grounded in behavioral psychologist Daniel Kahneman’s work in Thinking, Fast and Slow. There, he distinguishes between:

System 1 Thinking—reactive, intuitive, pattern-recognizing, “fast” thought; and

System 2 Thinking—conscious, deliberative, reflective, “slow” thought

Biased decisions happen most often when our brains default to System 1 mode. When we slow down our thinking and pay attention, this kicks our System 2 mode into gear, and we’re more able to identify, prevent, or correct for bias.

So far, we humans still have a leg up over AI in our capacity to apply System 2 Thinking to our own decision-making.


I keep using the qualifiers “not yet” and “so far” because, not coincidentally, the System 1/System 2 Thinking divide is already a focus in AI development.

For many working at much more sophisticated levels of AI deep learning, System 2 Thinking is the next frontier; the Holy Grail.

But that’s an avenue well outside the scope of this piece…

Ethical and Equitable Thinking

Besides System 2 Thinking (or connected to, but not limited to, it*****), there is also our human capacity for ethical and moral decision-making. (Again, I qualify this with “yet,” since some say AI can be trained to think ethically.)

System 2 Thinking alone can’t, for instance, account for the problem of malicious intent.

In a recent episode of “The Diary of a CEO” podcast that went viral, guest Mo Gawdat recommended people hold off from having kids, if they haven’t already had them, because:

“the likelihood of something incredibly disruptive happening within the next two years that can affect the entire planet is definitely larger with AI than it is with climate change.”

Gawdat cites ethical irresponsibility among those who hold power as the problem:

“[T]he problem we have today is that there is a disconnect between those who are writing the code of AI and the responsibility of what’s about to happen because of that code.”

He also makes clear that, while humans are the safeguard, we’re also the danger:

“The worry is that if a human is in control, a human will show very bad behavior for…using an AI that’s not yet fully developed.”

Interviewer Steven Bartlett calls it our Oppenheimer moment. (It’s probably no coincidence that a renewed interest in Oppenheimer has surfaced.)

In March 2023, over 1,000 AI experts signed an open letter calling for a pause on AI development. It states:

“AI labs are locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one—not even their creators—can understand, predict, or reliably control.”

Far beyond the power of AI to produce discriminatory outcomes, Gawdat is talking about its power to destroy humanity. He’s also talking about a far more advanced level of artificial intelligence than the algorithms we’ve been discussing.

Yet, despite its sophistication, Gawdat still likens this advanced AI to a 15-year-old let loose without good parental guidance:

“[T]he biggest challenge…is that we have given too much power to people that didn’t assume the responsibility…We have disconnected power and responsibility. So today, a 15-year-old, emotional, without a fully developed prefrontal cortex to make the right decisions yet—this is science.”

Gawdat is concerned with all of humanity. And so should we all be! But to return to our narrower focus—bias in AI—we should also be concerned that, even in what may seem like “lower-stakes” uses of AI, it’s those most often subjected to unjust treatment by inequitable systems who will suffer most and be most harmed.

If you’re a person of Middle Eastern descent misidentified as a terrorist, it’s not low stakes.

If a machine downgrades your ability to get a kidney transplant because you’re Black, it’s not low stakes.

When outcomes like these are becoming the stakes, I would argue that ethical thinking is inseparable from equitable thinking.

In both, you are asked to consider the needs of someone other than yourself. You are asked to think beyond reflexive, immediate self-gain. You are asked to think about SOME kind of greater, fairer, collective good.

This is the kind of human thinking and decision-making that we ALL, as social beings, need as a check against the dangers of AI bias.

What small part can Equity Sequence® play?

As part of the human, ethical check on AI decision-making, Equity Sequence® can serve as a complement and tool—whether you’re an individual working alone or a group working collaboratively.

Broussard writes about how “responsible AI (e.g., algorithmic auditing) can be embedded in ordinary business processes in order to address ethical concerns.” Embedding the practice of Equity Sequence® at critical points can help in achieving this.

If you’re a programmer building an algorithm or an auditor checking it, Equity Sequence® can add an equity lens to your decision-making about algorithm design; your training data; even the logic of your coding rules.

If you’re an organization adopting AI in an effort to reduce cost, increase efficiency, or even deliberately reduce human error, you can use Equity Sequence® to question every aspect of how the automated decisions you imagine to be fair, impartial, and accurate are actually being arrived at.

And there’s another value Equity Sequence® can play a role in helping foster: participatory decision-making.

We might modify the statement, “I need to retain the decision-making authority,” to: "WE need to retain the decision-making authority."

By “we,” I mean more of us, beyond the circle of coders currently producing AI algorithms. Because, as already noted, it’s still a mostly small, homogeneous group. And, as Gawdat calls out, they definitely aren’t motivated by pro-social values:

“The reality of the matter…is that this is an arms race…It is all about every line of code being written in AI today is to beat the other guy. It’s not to improve the life of the third party.”

It doesn’t take a giant leap to connect some more dots when it comes to the question of how so much bias is getting baked into so much AI.

I’m reminded of the current ‘X’ (previously ‘Twitter’)/Threads dust-up, with Musk literally challenging Zuckerberg to a juvenile contest NONE of us wants to witness.

Whether you’re talking about all of humanity or groups most hurt by bias; whether you’re talking about unintentional bias or full-on, anti-social, malicious intent, there’s a throughline: too few people with the power to make too many decisions that have the potential to adversely affect too many people.

…and motivated by cr*p values.

When it comes to biases getting baked into AI, if the people writing code aren’t motivated by ethical intent and equitable outcomes as a goal, then an absence of prosocial values and presence of bias WILL get transferred to their work.

We need forms of collective ethical judgment (e.g., the public interest technology Broussard discusses) that—again, at least for now—machine intelligence does not appear to have an ability to tap into. Certainly not intrinsically, since intrinsic anything when it comes to machine intelligence is nonsensical.

Speaking about a positive way out of the current dilemma of runaway AI, Gawdat hopes that:

“we become good parents…It’s the only way I believe we can create a better future…Their intelligence is beyond us, okay? The way they will build their ethics and value system is based on a role model. They’re learning from us. If we bash each other, they learn to bash us.”

If AI needs good parents, Equity Sequence® can help us all act—in concert with other ethically-minded humans—as good parents, stewards, and guardians, who want ALL their children to be treated fairly and to benefit from the most just and equitable outcomes possible.


Endnotes

* Sometimes the terms bias in machine learning and machine learning bias are used interchangeably with algorithmic bias, but there’s also a more technical definition of machine learning bias. This has less to do with prejudicial outcomes and more to do with the internal relationship between an algorithm’s “prediction model” and its training data set (and resulting error rates that occur because of a mismatch between the two). For the purposes of this article, we’ll stick to the term algorithmic bias.

** For a comprehensive explainer on the technical differences among artificial intelligence (AI), machine learning (ML), and algorithms, read this.

*** This is a simplification, and I’m sure many would say only partially accurate. Part of what makes machine learning so scary is that the ability of computers to “learn” (by some definitions of learning) goes well beyond rote decision-making. One subset of machine learning that enables ChatGPT to do what it does is called deep learning.

**** O’Neil’s organization, ORCAA (O’Neil Risk Consulting and Algorithmic Auditing), is helping build and spread the practice of algorithmic auditing. Buolamwini founded the Algorithmic Justice League and has been at the forefront of the push for policy action, notably efforts to enact U.S. legislation to regulate AI use. And Broussard writes in the final chapter of her book about the movement toward public interest technology.

***** There are schools of research devoted to the study of “moral cognition” and understanding how moral and ethical thinking maps against “dual process theories” of cognition like Kahneman’s. The overall consensus seems to be that System 2 Thinking plays a role in moral and ethical decision-making. But it’s not a blanket either/or. For example, read what “Ethics Sage” Steven Mintz has to say about the role System 1 intuition plays in ethical decision-making. And see Hanno Sauer’s proposal of a third system in “Moral Thinking, Fast and Slow.”

Suhlle Ahn

VP of Content and Community Relations